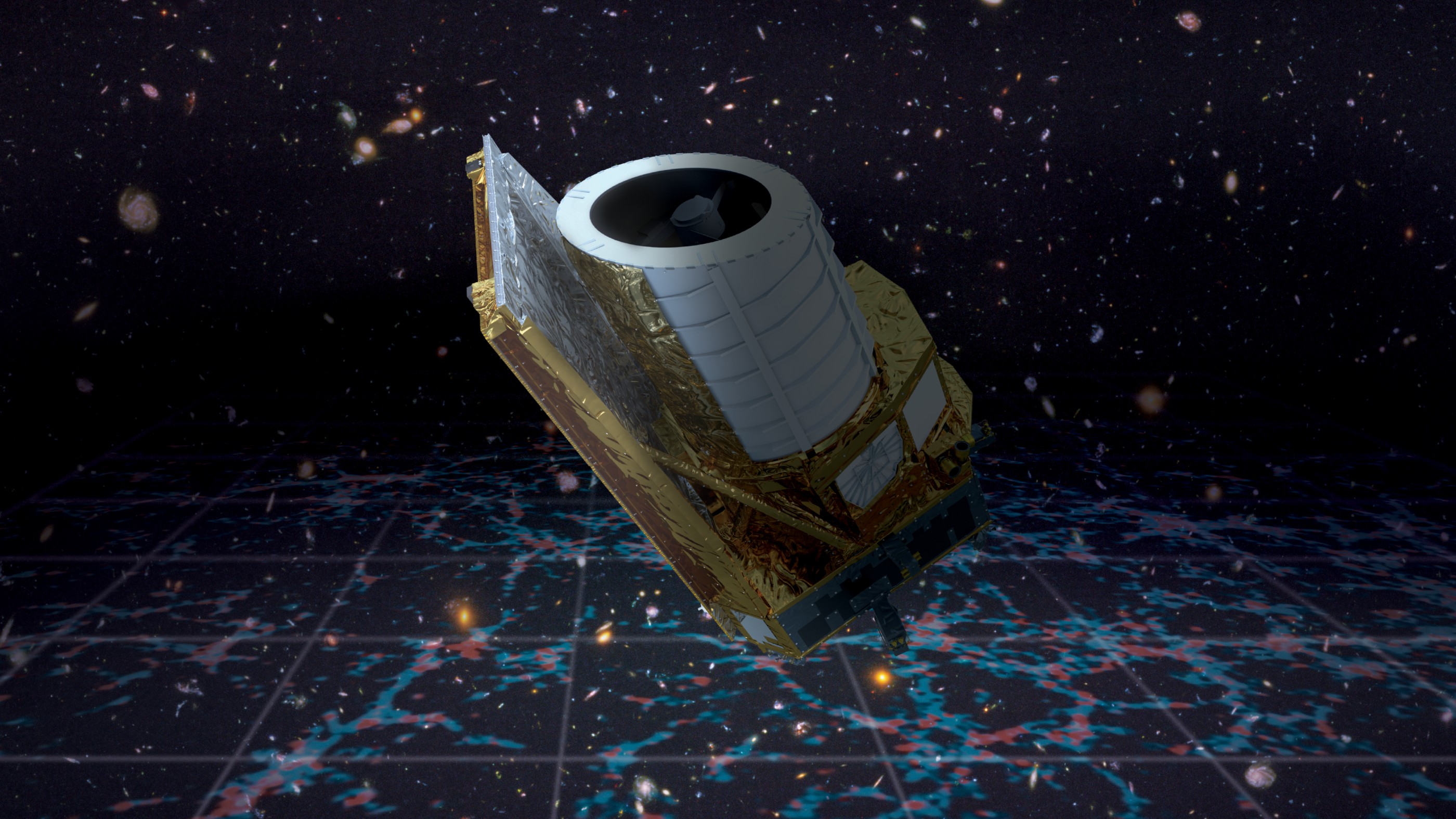

Today, at exactly 17.11 CEST, the European Space Agency’s newest mission was launched on a SpaceX Falcon 9 rocket from Cape Canaveral in Florida, USA. Called Euclid, the 2-tonne space telescope, 4.5 m tall and 3.1 m in diameter, will be used to map the geometry of the Universe, in particular to explore the nature of dark matter and dark energy. Euclid has been a CERN-recognised experiment since 2015 and will use key software and computing infrastructure provided by CERN to process vast amounts of data.

Understanding the evolution of the Universe is a fundamental challenge in modern physics. Astronomical observations show that the Universe’s rate of expansion is not constant, and scientists believe that dark energy could be the culprit, while dark matter governs the large-scale structure of the Universe. As their names suggest, dark matter and dark energy are “invisible” to current telescopes, because they do not interact with light in the way that normal – or “visible” – matter does. Scientists instead use telescopes like Euclid to look for their effects on observable matter, for example by studying the tiny deformations of galaxy shapes and by measuring galaxy redshifts to trace the distribution of galaxies over space and time. Euclid will be the most comprehensive such investigation to date, scanning optical light from billions of galaxies up to 10 billion light years away and covering almost a third of the sky. The aim is to create a map through time and space of the large-scale structure of the Universe.

To do this, the mission requires vast amounts of data and data-processing capabilities. This is where CERN, which is used to processing and storing data from millions of high-energy particle collisions per second, comes in. CERN is involved in the Euclid programme’s science ground segment (SGS). The SGS will process and analyse Euclid data and merge it with data from ground-based telescopes to study the properties of dark energy and dark matter.

The SGS will process over 850 Gbits of compressed images per day, the largest data volume of any ESA mission to date, ultimately producing tens of petabytes of reduced data. “Given the complexity of the infrastructure and the pressure in analysing the data as fast as possible, the support and expertise of CERN is of high relevance,” says Luca Valenziano, Euclid Consortium representative at CERN. “The data will be processed in a distributed infrastructure of nine data centres. CERN provides the means to efficiently deploy the software to these data centres using CVMFS (CernVM File System), and will continue to support the Euclid SGS in this way during its mission lifetime.”
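For a sense of scale, the quoted daily volume can be converted into more familiar units. The short Python sketch below is purely illustrative: it assumes decimal (SI) units, and the six-year survey duration is an assumption that does not come from this article.

```python
# Illustrative unit conversion for the quoted daily image volume.
GBIT_PER_DAY = 850          # from the article: compressed images per day
ASSUMED_SURVEY_YEARS = 6    # assumption: duration is not stated in the article


def gbit_to_gbyte(gbit: float) -> float:
    """Convert gigabits to gigabytes (8 bits per byte, decimal units)."""
    return gbit / 8


def total_terabytes(gbit_per_day: float, years: float) -> float:
    """Accumulated volume in terabytes over the given number of years."""
    days = years * 365.25
    return gbit_to_gbyte(gbit_per_day) * days / 1000


daily_gb = gbit_to_gbyte(GBIT_PER_DAY)
total_tb = total_terabytes(GBIT_PER_DAY, ASSUMED_SURVEY_YEARS)

print(f"{daily_gb:.2f} GB per day")
print(f"{total_tb:.1f} TB over {ASSUMED_SURVEY_YEARS} years")
```

Under these assumptions, the compressed images amount to roughly 106 GB per day, or a few hundred terabytes over the assumed duration.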

CERN’s involvement is not limited to a technological contribution, as theoretical physics at the Laboratory has strong ties with the science of Euclid. “The exact properties of galaxy density fluctuations depend on the entire history of the Universe, and cosmologists at CERN have been working on developing theoretical frameworks to predict them,” explains Marko Simonović, from CERN’s Theoretical Physics department. “Tools developed at CERN, among other places, will be used by the Euclid collaboration to make comparisons of data and theory and test theories beyond standard models of cosmology and particle physics. Any new discovery in cosmology would indirectly be a new discovery in particle physics.”

CERN is part of the Euclid consortium, an organisation that brings together about 2000 scientists in 300 laboratories in 17 different countries in Europe, the USA, Canada and Japan. The consortium is responsible for designing and building the NISP and VIS instruments, gathering all ground-based complementary data, developing the survey strategy and the data-processing pipeline to produce all calibrated images and catalogues, and scientifically exploiting the data.

Read more:

- Euclid to link the largest and smallest scales (CERN Courier)

- Euclid mission page (ESA)

- Euclid consortium website